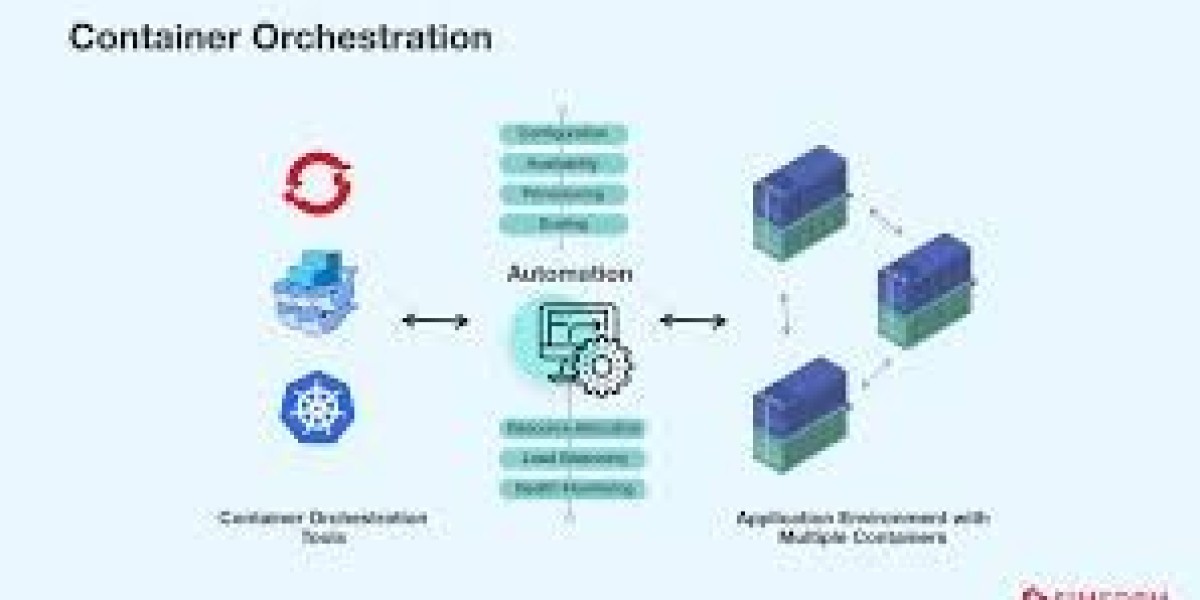

When building software, containerizing applications and their dependencies has become the go-to approach for modern developers. But as applications have evolved, the demand for larger and more varied deployments has grown, creating the challenge of managing a very large number of containers. This is where Kubernetes comes into play. Often described as a container orchestration platform, it tackles the complexity of deploying, maintaining, and scaling containerized applications.

In this beginner's guide, we focus on what Kubernetes is, how it works, and how it enables containerized applications to run smoothly. This article is meant for absolute beginners who have a basic understanding of containers but are new to Kubernetes, and it should help answer some of the basic questions newcomers tend to have.

What is Kubernetes - Why is everyone so excited about it?

Kubernetes, often abbreviated as K8s (the 8 stands for the eight letters between the K and the s), is an open-source platform that handles the deployment, scaling, and maintenance of containerized applications. It was originally developed by Google and is now maintained under the Cloud Native Computing Foundation (CNCF). Kubernetes is an excellent way to manage large-scale containerized applications, particularly in multi-cloud environments.


Kubernetes doesn't just run containers; it manages how they run, coordinating each container's access to the cluster's resources. It handles critical functions such as automatic scaling, load balancing, and self-healing, which are essential from a business perspective when running containerized applications.

Why Do You Need Kubernetes?

Applications are steadily becoming more complex and therefore harder to manage. Docker simplifies containerization by packaging applications so they run consistently anywhere, while Kubernetes helps run large numbers of containers at the same time. Let's look at some of the ways Kubernetes is crucial:

Container Orchestration: As applications grow, managing containers one by one becomes cumbersome. Kubernetes automates the deployment of containers across many machines and controls how they are scheduled, so containers can be replicated and distributed across a set of nodes in a coordinated way.

Scalability: With autoscaling configured, Kubernetes can automatically adjust the number of running pods based on observed load, such as CPU utilization, which reduces the risk of over- or under-provisioning.

High Availability and Reliability: Kubernetes continuously monitors container health and can automatically restart or replace failed containers to maximize application uptime.

Resource Efficiency: Kubernetes uses resources more efficiently by placing containers on nodes that have capacity available, which helps deliver more performance at lower cost.

Key Components of Kubernetes

Before looking in more detail at how Kubernetes operates, it helps to become familiar with its key components:

1. Pod

The pod is the most basic concept in Kubernetes. It is the smallest deployable unit that Kubernetes manages, and it can contain one or more containers. All containers in a pod share the same network identity and can share storage volumes, which lets them communicate with each other easily (for example, over localhost). A pod usually runs a single instance of an application; to run more instances, Kubernetes runs more replicas of the pod. Even so, a pod can hold several tightly coupled containers that work closely together, such as an application container and a helper container.
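To make this concrete, here is a minimal sketch of a Pod whose two containers share a volume. It builds the manifest as a Python dict and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. The pod name, images, command, and mount paths are hypothetical placeholders.

```python
import json

# Minimal Pod manifest as a Python dict. Names, images, the command and the
# mount paths are hypothetical placeholders chosen for illustration.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-helper", "labels": {"app": "web"}},
    "spec": {
        # An emptyDir volume lives as long as the pod and can be mounted
        # by every container in it, so the containers can share files.
        "volumes": [{"name": "shared-data", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "ports": [{"containerPort": 80}],
                "volumeMounts": [
                    {"name": "shared-data", "mountPath": "/usr/share/nginx/html"}
                ],
            },
            {
                "name": "content-writer",
                "image": "busybox:1.36",
                # Writes a page into the shared volume, then stays alive.
                "command": ["sh", "-c", "echo hello > /data/index.html && sleep 3600"],
                "volumeMounts": [{"name": "shared-data", "mountPath": "/data"}],
            },
        ],
    },
}

if __name__ == "__main__":
    # Print as JSON; redirect to a file and pass it to kubectl.
    print(json.dumps(pod, indent=2))
```

Saving the script as, say, `pod.py` and running `python pod.py > pod.json && kubectl apply -f pod.json` would create the pod on a cluster you have access to. Inside the pod, nginx serves the file the helper container wrote into the shared volume.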

2. Node

A node is a worker machine in Kubernetes, either physical or virtual, and it is where your pods actually run. Each node is managed by the Kubernetes control plane. Containers cannot run on a node without a kubelet (the agent that registers the node with the cluster and makes sure the containers in its pods are running) and a container runtime (the software, such as containerd, that actually runs the containers), which is why every node comes equipped with both.

3. Cluster

To run containerized applications, nodes are grouped into a Kubernetes cluster. Every cluster has a control plane (historically called the master node) that supervises the cluster and monitors the worker nodes. The worker nodes run the containers that the control plane schedules onto them. The control plane's primary job is to manage existing and new workloads so that the worker nodes run what the cluster's configuration calls for. Production clusters often run several control-plane nodes for high availability rather than relying on a single master.

4. Deployment

A Deployment is a higher-level object that lets you manage a group of identical pods. A Deployment contains a description of an application, including how many instances (replicas) of a pod should run, and Kubernetes ensures that the actual state of the application always matches that definition. If a pod crashes or is deleted manually, Kubernetes replaces it by starting a new instance of the pod.
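The following sketch shows the shape of a minimal Deployment manifest, again generated from Python as JSON. The `make_deployment` helper and the `hello-web`/`nginx:1.25` values are hypothetical; the field names (`replicas`, `selector.matchLabels`, `template`) follow the `apps/v1` Deployment API.

```python
import json


def make_deployment(name, image, replicas):
    """Build a minimal apps/v1 Deployment manifest (illustrative sketch)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # Desired number of identical pods.
            "replicas": replicas,
            # The selector tells the Deployment which pods it owns;
            # it must match the labels in the pod template below.
            "selector": {"matchLabels": labels},
            # Template used to create (and re-create) each pod.
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical name and image; pipe the output to `kubectl apply -f -`.
    print(json.dumps(make_deployment("hello-web", "nginx:1.25", 3), indent=2))
```

If you later delete one of the three pods by hand, the Deployment (through the ReplicaSet it manages) sees that fewer replicas are running than requested and creates a replacement.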

5. Service

A Service is a logical abstraction that defines a group of pods and a policy for accessing them. Services let pods within a cluster communicate with each other and allow external users to reach applications deployed in the cluster. Kubernetes provides several Service types, including ClusterIP (reachable only inside the cluster, and the default), NodePort (exposed on a port on every node), and LoadBalancer (exposed through an external load balancer, typically provided by a cloud provider).
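Services find their pods through labels. The sketch below models how a Service's equality-based selector picks out backend pods: a pod matches when it carries every key/value pair in the selector. The `selects` helper and the pod list are hypothetical and simplified; real endpoint tracking also takes into account things like pod readiness.

```python
def selects(selector, pod_labels):
    """Return True if the pod's labels contain every key/value pair in the selector."""
    # A Service without a selector does not pick up pods automatically.
    if not selector:
        return False
    return all(pod_labels.get(key) == value for key, value in selector.items())


# Hypothetical Service: ClusterIP is the default, internal-only type.
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "hello-web"},
    "spec": {
        "type": "ClusterIP",
        "selector": {"app": "hello-web"},
        # Traffic to port 80 on the Service is forwarded to port 80 in the pods.
        "ports": [{"port": 80, "targetPort": 80}],
    },
}

# Hypothetical pods running in the cluster.
pods = [
    {"name": "hello-web-1", "labels": {"app": "hello-web", "tier": "frontend"}},
    {"name": "hello-web-2", "labels": {"app": "hello-web"}},
    {"name": "db-0", "labels": {"app": "db"}},
]

if __name__ == "__main__":
    backends = [p["name"] for p in pods if selects(service["spec"]["selector"], p["labels"])]
    print(backends)  # ['hello-web-1', 'hello-web-2']
```

Extra labels on a pod (such as `tier` above) do not prevent a match; only the selector's own pairs must be present.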

6. ConfigMap and Secrets

For configuration management, Kubernetes provides two built-in primitives: ConfigMaps and Secrets. ConfigMaps hold non-sensitive information, such as configuration files, environment variables, and command-line arguments. Secrets are designed to hold sensitive information, such as passwords, tokens, and certificates. Note, however, that Secret data is not encrypted by default: the values are only base64-encoded and are stored unencrypted in the cluster's data store (etcd) unless encryption at rest is enabled, so access to Secrets should be restricted.
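Because the difference between encoding and encryption is easy to miss, here is a short sketch of what a Secret's `data` field contains. The credentials and the Secret name are hypothetical. Values under `data` are base64-encoded, and decoding them requires no key at all:

```python
import base64

# Hypothetical credentials, for illustration only.
plain = {"username": "admin", "password": "s3cr3t"}

# Values under a Secret's "data" field must be base64-encoded strings.
secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "db-credentials"},
    "type": "Opaque",
    "data": {key: base64.b64encode(value.encode()).decode() for key, value in plain.items()},
}

# Decoding requires no key: base64 is an encoding, not encryption.
decoded = {key: base64.b64decode(value).decode() for key, value in secret["data"].items()}

if __name__ == "__main__":
    print(secret["data"]["username"])  # YWRtaW4=
    print(decoded == plain)            # True
```

Kubernetes also accepts a `stringData` field with plain-text values, which it encodes for you. Either way, protecting Secrets depends on access control and, where available, encryption at rest.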

Working Mechanism Of Kubernetes

Kubernetes simplifies the complex task of running and maintaining containers by breaking it into smaller tasks. Here is how it works at a high level:

Desired State: The user defines the desired state of the application in Kubernetes, such as the number of instances or which containers should run in each pod. Kubernetes then continuously compares the actual state of the cluster with this desired state; if there is any discrepancy, it makes adjustments to bring the two back in line. This reconciliation happens automatically, without human intervention.
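The reconciliation idea can be illustrated with a toy controller. This is a deliberately simplified model, not the real ReplicaSet controller: it compares the number of running pods with the desired count and creates or deletes pods until they match. The class and pod names are hypothetical.

```python
class ToyReplicaController:
    """Toy model of a controller reconciling running pods toward a desired count.

    Simplified illustration only; not the real Kubernetes ReplicaSet controller.
    """

    def __init__(self, desired_replicas):
        self.desired_replicas = desired_replicas  # desired state
        self.running = []                         # observed (actual) state
        self._next_id = 0

    def reconcile(self):
        """Run one reconciliation pass and return the actions taken."""
        actions = []
        # Fewer pods than desired: create the missing ones.
        while len(self.running) < self.desired_replicas:
            name = f"pod-{self._next_id}"
            self._next_id += 1
            self.running.append(name)
            actions.append(("create", name))
        # More pods than desired: delete the surplus (most recently added first).
        while len(self.running) > self.desired_replicas:
            actions.append(("delete", self.running.pop()))
        return actions


if __name__ == "__main__":
    ctrl = ToyReplicaController(desired_replicas=3)
    print(ctrl.reconcile())        # creates pod-0, pod-1, pod-2
    ctrl.running.remove("pod-1")   # simulate a crashed pod
    print(ctrl.reconcile())        # [('create', 'pod-3')]
    ctrl.desired_replicas = 1      # the user edits the desired state
    print(ctrl.reconcile())        # [('delete', 'pod-3'), ('delete', 'pod-2')]
```

Real controllers run this kind of comparison continuously, reacting to changes reported by the API server rather than being called by hand.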

Control Plane and Worker Nodes: The control plane is in charge of managing the cluster as a whole. Its components include the API server (kube-apiserver), the cluster data store (etcd), the scheduler (kube-scheduler), and the controller manager (kube-controller-manager), which runs the control loops that monitor the cluster. The worker nodes, in turn, run the actual application workloads.

Scheduling: The Kubernetes scheduler decides which worker node each new pod should run on. It bases this decision on criteria such as the resources available on each node, the resources the pod requests, and other constraints such as node affinity rules and taints.
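As a rough illustration of the two phases a scheduler goes through, the sketch below first filters out nodes that cannot fit a pod's CPU and memory requests, then scores the remaining nodes by how much capacity would be left free. The scoring is loosely modelled on the idea behind the "least allocated" strategy; the real kube-scheduler runs many more filter and score plugins (affinity, taints, topology spreading, and so on). The `Node` class and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical node model: allocatable capacity and what pods already request."""
    name: str
    cpu_capacity_m: int          # CPU in millicores (1000m = 1 core)
    mem_capacity_mi: int         # memory in MiB
    cpu_requested_m: int = 0
    mem_requested_mi: int = 0


def schedule(cpu_m, mem_mi, nodes):
    """Pick a node for a pod requesting cpu_m millicores and mem_mi MiB, or None."""
    # Filter phase: drop nodes whose remaining capacity cannot fit the requests.
    feasible = [
        n for n in nodes
        if n.cpu_capacity_m - n.cpu_requested_m >= cpu_m
        and n.mem_capacity_mi - n.mem_requested_mi >= mem_mi
    ]
    if not feasible:
        return None  # a real pod would stay Pending until capacity frees up

    # Score phase: average fraction of CPU and memory left free after placement.
    def score(n):
        cpu_free = (n.cpu_capacity_m - n.cpu_requested_m - cpu_m) / n.cpu_capacity_m
        mem_free = (n.mem_capacity_mi - n.mem_requested_mi - mem_mi) / n.mem_capacity_mi
        return (cpu_free + mem_free) / 2

    return max(feasible, key=score).name


if __name__ == "__main__":
    nodes = [
        Node("node-a", 4000, 8192, cpu_requested_m=3500, mem_requested_mi=2048),
        Node("node-b", 2000, 4096, cpu_requested_m=500, mem_requested_mi=1024),
        Node("node-c", 4000, 16384, cpu_requested_m=1000, mem_requested_mi=12288),
    ]
    print(schedule(500, 1024, nodes))   # node-b
    print(schedule(3000, 1024, nodes))  # node-c
    print(schedule(5000, 1024, nodes))  # None
```

With these numbers, node-b wins the first placement because it would keep half of both its CPU and memory free, while node-a would be left with no spare CPU.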

Scaling and Load Balancing: When autoscaling is configured, Kubernetes can scale an application without manual intervention by starting new pods when demand exceeds capacity. Services spread incoming requests across the available pods, which improves response times and availability.
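When a HorizontalPodAutoscaler is configured, the Kubernetes documentation gives its core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below implements that formula with min/max replica bounds and a tolerance band (documented as 0.1 by default). The function name and parameters are our own, and the real controller also applies stabilization windows and other behaviour not modelled here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Sketch of the HPA core formula with min/max bounds and a tolerance band.

    max_replicas is required on a real HPA; the default of 10 here is arbitrary.
    """
    ratio = current_metric / target_metric
    # Within the tolerance band the autoscaler leaves the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the bounds configured on the autoscaler.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(3, 90, 60))   # 5: ceil(3 * 1.5)
    print(desired_replicas(4, 20, 60))   # 2: scale in when load drops
    print(desired_replicas(3, 63, 60))   # 3: within tolerance, no change
```

For example, 3 replicas averaging 90% CPU against a 60% target gives ceil(3 × 1.5) = 5 replicas.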

Self-healing capabilities: Kubernetes monitors the state of the nodes and of the containers running in pods. If a container or pod fails, Kubernetes keeps the application running by restarting the container or replacing the pod with a new one.
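One detail of self-healing is that repeated restarts are not immediate. The Kubernetes documentation describes the kubelet restarting exited containers (subject to the pod's `restartPolicy`) with an exponential back-off of 10s, 20s, 40s and so on, capped at five minutes, and resetting the back-off once a container has run for ten minutes without problems. The function below is a hypothetical sketch of that default schedule only; exact values may differ between Kubernetes versions.

```python
def restart_delays(failures, base_seconds=10, cap_seconds=300):
    """Back-off delay before each of the first `failures` restarts: doubles, then caps.

    Does not model the reset that happens after a container runs cleanly for a while.
    """
    return [min(base_seconds * 2 ** attempt, cap_seconds) for attempt in range(failures)]


if __name__ == "__main__":
    print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```

This growing delay is what you see reported as the `CrashLoopBackOff` status when a container keeps failing.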

Pros of Kubernetes

Now that you are familiar with the basic concepts and operations of Kubernetes, let's look at some of the advantages it offers developers and businesses.

1. Less Complicated Container Management

When a large number of containers is involved, deploying, scaling, and monitoring them by hand becomes overwhelming and inefficient. When you manage many deployments across a distributed environment, Kubernetes reduces the amount of manual container management by automating tasks such as scaling.

2. Better Utilization of Resources

Kubernetes automatically assigns containers to suitable nodes, which lets it make efficient use of the available resources. On top of that, resource requests and limits let you reserve capacity for each container and prevent containers from using more than their share.
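Requests and limits are set per container. The sketch below shows a container spec with both, plus a simplified parser for Kubernetes quantity strings (`250m` of CPU is a quarter of a core, `64Mi` is 64 mebibytes) and a check that no request exceeds its limit, a rule the API server also enforces. The parser handles only a subset of suffixes; it is an illustration, not the official quantity implementation, and the container values are hypothetical.

```python
from fractions import Fraction

# Subset of Kubernetes quantity suffixes: binary (Ki, Mi, Gi),
# decimal (k, M, G) and milli (m, commonly used for CPU).
_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30,
    "k": 10**3, "M": 10**6, "G": 10**9,
    "m": Fraction(1, 1000),
}


def parse_quantity(quantity):
    """Convert a quantity string such as '250m' or '64Mi' to an exact Fraction."""
    for suffix, multiplier in _SUFFIXES.items():
        if quantity.endswith(suffix):
            return Fraction(quantity[: -len(suffix)]) * multiplier
    return Fraction(quantity)  # plain number, e.g. "2" CPUs


# Hypothetical container spec with requests (reserved) and limits (ceiling).
container = {
    "name": "web",
    "image": "nginx:1.25",
    "resources": {
        "requests": {"cpu": "250m", "memory": "64Mi"},
        "limits": {"cpu": "500m", "memory": "128Mi"},
    },
}


def requests_within_limits(resources):
    """True if no resource request exceeds its corresponding limit."""
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})
    return all(
        parse_quantity(requests[name]) <= parse_quantity(limits[name])
        for name in requests
        if name in limits
    )


if __name__ == "__main__":
    print(parse_quantity("250m"), parse_quantity("64Mi"))  # 1/4 67108864
    print(requests_within_limits(container["resources"]))  # True
```

The scheduler places pods based on their requests, while limits cap what a running container may consume.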

3. Higher Uptime for Applications

Kubernetes' management features help prevent abrupt application downtime, even when containers on multiple nodes fail. Because Kubernetes restarts failed containers and replaces failed pods automatically, recovery does not require manual intervention, which gives users a more seamless experience.

4. Uncomplicated Rollout and Rollback

Kubernetes simplifies upgrading workloads: if an update causes problems, you can roll back to a previous version. Deployments use a process called rolling updates, which replaces pods gradually rather than all at once, so the application stays available while the new version is rolled out.
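A Deployment's rolling update is governed by two settings, `maxSurge` and `maxUnavailable`, which both default to 25%. Per the Kubernetes documentation, a percentage `maxSurge` is rounded up and a percentage `maxUnavailable` is rounded down. The hypothetical helper below computes the resulting bounds on the total number of pods and on the number of available pods during an update:

```python
def rolling_update_bounds(replicas, max_surge="25%", max_unavailable="25%"):
    """Return (max total pods, min available pods) during a rolling update."""

    def resolve(value, round_up):
        # Percentages are relative to the desired replica count.
        if isinstance(value, str) and value.endswith("%"):
            whole, remainder = divmod(replicas * int(value[:-1]), 100)
            return whole + 1 if (round_up and remainder) else whole
        return int(value)  # absolute number of pods

    surge = resolve(max_surge, round_up=True)               # maxSurge rounds up
    unavailable = resolve(max_unavailable, round_up=False)  # maxUnavailable rounds down
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    print(rolling_update_bounds(10))  # (13, 8)
    print(rolling_update_bounds(4))   # (5, 3)
```

With 10 replicas and the defaults, Kubernetes may run up to 13 pods while keeping at least 8 available. If the new version misbehaves, `kubectl rollout undo deployment/<name>` rolls back to the previous revision.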

5. Expandable

Kubernetes makes it easy to publish new releases and to scale applications throughout their life cycle, either manually (for example, by changing a Deployment's replica count) or automatically. With autoscaling, Kubernetes adds or removes pods as resource usage changes, so the system can absorb shifts in load.

Kubernetes For Beginners

If you've never worked with Kubernetes, start with these tasks:

Set Up a Local Cluster: Install a lightweight local Kubernetes distribution such as Minikube or Docker Desktop (which includes an optional single-node Kubernetes cluster), whichever fits your development environment best.

Understand kubectl: kubectl is the command-line tool for interacting with a Kubernetes cluster. Practice using it with commands such as kubectl get pods, kubectl create deployment, and kubectl scale.

Run Your First Pod: Begin by deploying a simple pod that runs a containerized application, then try scaling it out or exposing it through a Service.
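Putting these first steps together, the sketch below lists a typical sequence of kubectl commands: create a Deployment (which in turn creates a pod), check the pods, scale to three replicas, and expose the Deployment through a NodePort Service. It is wrapped in Python only so the steps can be printed or run in order; the deployment name and image are hypothetical, and by default the script only prints the commands.

```python
import shutil
import subprocess

# Hypothetical names; any image your cluster can pull will do.
NAME = "hello-web"
IMAGE = "nginx:1.25"

STEPS = [
    ["kubectl", "create", "deployment", NAME, f"--image={IMAGE}"],
    ["kubectl", "get", "pods"],
    ["kubectl", "scale", f"deployment/{NAME}", "--replicas=3"],
    ["kubectl", "expose", "deployment", NAME, "--port=80", "--type=NodePort"],
    ["kubectl", "get", "services"],
]


def run_steps(steps, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in steps:
        print("$", " ".join(cmd))
        if not dry_run:
            if shutil.which("kubectl") is None:
                raise RuntimeError("kubectl not found on PATH")
            # check=True stops at the first command that fails.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_steps(STEPS, dry_run=True)
```

On Minikube, `minikube service hello-web` then opens the NodePort Service in your browser.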

Kubernetes is a powerful platform that streamlines the management of containerized applications at scale. It handles critical tasks such as deployment, scaling, load balancing, and self-healing, which makes it indispensable for modern software development. By abstracting away the complexities of managing containers, Kubernetes enables developers to focus on building and delivering applications more efficiently and reliably.

Whether you are running a small application or managing a large-scale distributed system, Kubernetes offers the tools and flexibility needed to ensure that your containers run smoothly, regardless of the environment. As the demand for scalable, reliable applications grows, Kubernetes continues to be a central player in the world of cloud-native application development.